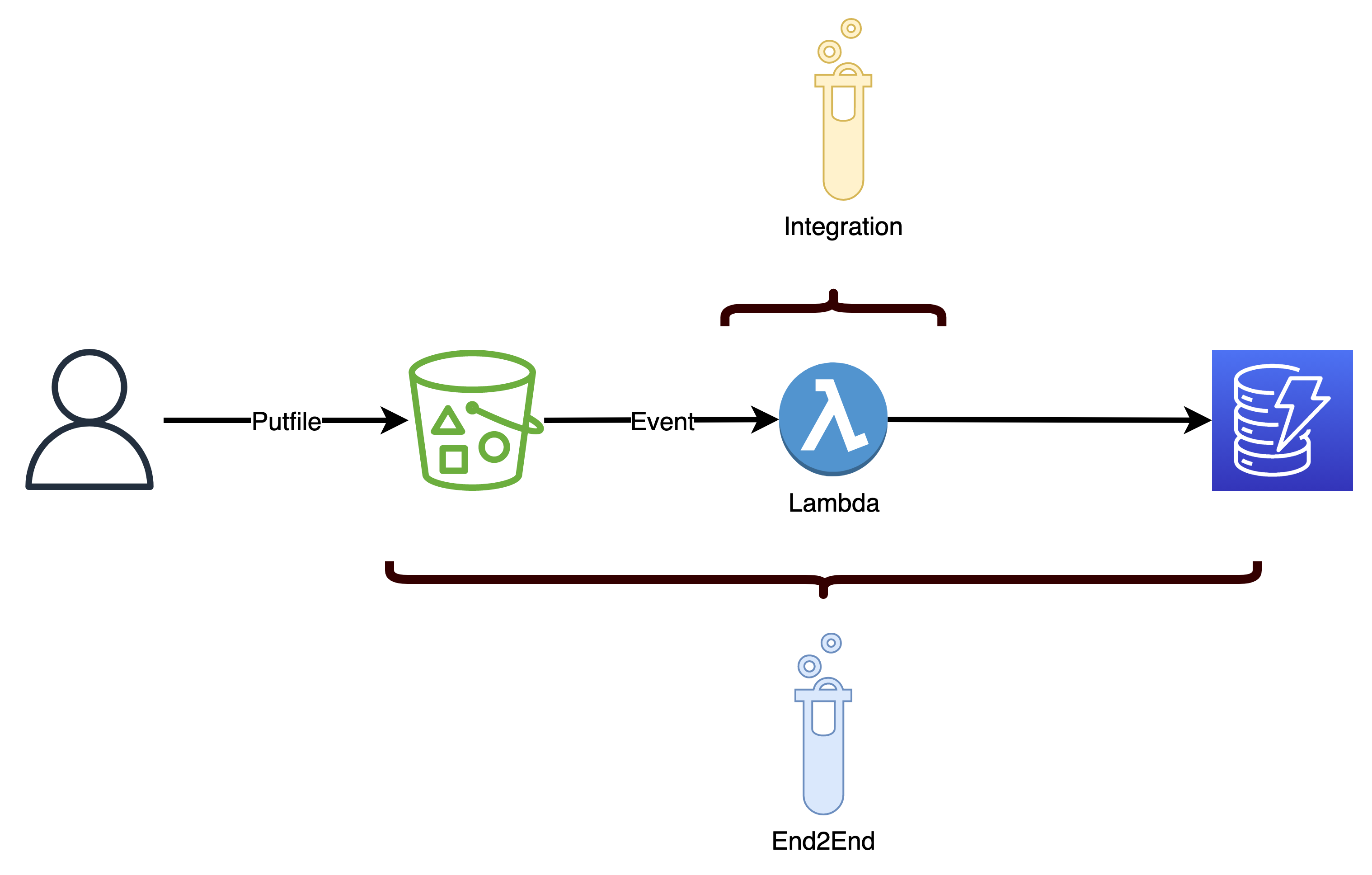

Integration/End2End Test of the Application

Now we can invoke the Lambda function itself with a self-generated event. This is the integration test.

When we start the test with the event from “outside”, that is, by putting an object in the bucket, we test the whole application end to end. Because this end2end test uses the application that runs on the infrastructure, there is only one end2end test scenario per use case, not two.

Integration test of the lambda function

I have not coded this example in Go; you may want to try automating it yourself.

The steps are defined in the Taskfile.yml. When you call task itest, each step is printed as it runs.

- Delete item in Table

- Check whether the item is deleted

- Call lambda with test event test/put.json

- Wait

- Get item from table

- Manually check the results

You can try it on the AWS CLI with:

aws lambda invoke --function-name {{.FN}} --payload fileb://./test/put.json test/result.json

Where FN is the function name.

Or check the whole cycle with the cloned go-on-aws-source repository:

cd architectures/serverless/app

task itest

Gives the output:

task: [itest] aws dynamodb delete-item --table-name dsl-items07D08F4B-S4BK34JCHWDO --key file://testdata/key.json

task: [itest] aws dynamodb get-item --table-name dsl-items07D08F4B-S4BK34JCHWDO --key file://testdata/key.json

task: [itest] time aws lambda invoke --function-name logincomingobject --payload fileb://./test/put.json test/result.json

{

"StatusCode": 200,

"ExecutedVersion": "$LATEST"

}

real 0m1.037s

user 0m0.000s

sys 0m0.000s

task: [itest] date

Sa 18 Dez 2021 16:20:26 CET

task: [itest] sleep 5

task: [itest] aws dynamodb get-item --table-name dsl-items07D08F4B-S4BK34JCHWDO --key file://testdata/key.json

{

"Item": {

"itemID": {

"S": "my2etestkey.txt"

},

"time": {

"S": "2021-12-18 15:20:26.630121344 +0000 UTC m=+0.052973681"

}

}

}
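The manual check of this output could also be automated. The following is a minimal sketch, assuming the get-item output has the shape shown above with string ("S") attributes only; checkItem and getItemOutput are hypothetical names, not part of the repository.

```go
package main

import (
	"encoding/json"
	"errors"
	"fmt"
)

// getItemOutput mirrors the part of the `aws dynamodb get-item` JSON output
// that matters here: a map of attribute names to string ("S") values.
type getItemOutput struct {
	Item map[string]struct {
		S string `json:"S"`
	} `json:"Item"`
}

// checkItem verifies that the output contains the expected itemID
// and a non-empty time attribute (hypothetical helper).
func checkItem(raw []byte, wantID string) error {
	var out getItemOutput
	if err := json.Unmarshal(raw, &out); err != nil {
		return fmt.Errorf("parse get-item output: %w", err)
	}
	if len(out.Item) == 0 {
		return errors.New("item not found")
	}
	if got := out.Item["itemID"].S; got != wantID {
		return fmt.Errorf("itemID = %q, want %q", got, wantID)
	}
	if out.Item["time"].S == "" {
		return errors.New("attribute time is missing or empty")
	}
	return nil
}

func main() {
	// Sample taken from the get-item output above.
	raw := []byte(`{"Item": {"itemID": {"S": "my2etestkey.txt"}, "time": {"S": "2021-12-18 15:20:26.630121344 +0000 UTC m=+0.052973681"}}}`)
	if err := checkItem(raw, "my2etestkey.txt"); err != nil {
		fmt.Println("FAIL:", err)
		return
	}
	fmt.Println("OK")
}
```

In an automated version of task itest, the raw bytes would come from the CLI output instead of a literal.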

You could use LambCI or AWS SAM to invoke the Lambda function on your laptop in a Docker container. The usual argument for doing so is that single-step debugging is easier that way.

I prefer to debug the unit tests, which also gives you single-step debugging, and to invoke the deployed Lambda function. Invoking the deployed function has some more advantages:

- You also test the IAM permissions of the Lambda function

- The function is deployed, so you test the configuration

- With CDK and other frameworks deployment is easy

- You do not need Docker locally

- You also get the timing to rightsize the Lambda memory size

End2End Test

Here you have to do a clean setup and teardown of the database, so the steps are:

- Delete testitem(s) in database

- Check that the testitem does not appear in the database

- Put the file in S3

- Wait

- Check Test-Item in Table

- Delete testitem in table

The steps are coded in architectures/serverless/app/integration_test.go in the test TestAppInvokeLambdaWithEvent.

1) Delete testitem

58 var itemID = "my2etestkey.txt"

59

60 key := map[string]types.AttributeValue{

61 "itemID": &types.AttributeValueMemberS{Value: itemID},

62 }

...

66 parmsDDBDelete := &dynamodb.DeleteItemInput{

67 Key: key,

68 TableName: &table,

69 }

70 t.Log("Setup - delete item")

71 _, err := ClientD.DeleteItem(context.TODO(), parmsDDBDelete)

72 assert.NilError(t, err, "Delete Item should work")

Lines 60-62 show how to encode a DynamoDB string attribute with AttributeValueMemberS.

2) Check that the testitem does not appear in the database

73 parmsDDBGet := &dynamodb.GetItemInput{

74 Key: key,

75 TableName: &table,

76 ConsistentRead: aws.Bool(true),

77 }

78 responseDDB, err := ClientD.GetItem(context.TODO(), parmsDDBGet)

79 assert.Equal(t, 0, len(responseDDB.Item), "Delete should work")

Line 79 checks that the database is clean before the test starts. This is the setup of the test.

3) Put the file in S3

81 // *** Copy object to S3

82 testObjectFilename := "./testdata/dummy.txt"

83 file, err := os.Open(testObjectFilename)

84 assert.NilError(t, err, "Open file "+testObjectFilename+" should work")

85

86 defer file.Close()

87 t.Log("Copy object to S3")

88 parmsS3 := &s3.PutObjectInput{

89 Bucket: &bucket,

90 Key: &itemID,

91 Body: file,

92 }

93 _, err = ClientS.PutObject(context.TODO(), parmsS3)

94 assert.NilError(t, err, "Put Object "+itemID+" should work")

This is quite straightforward: just call the S3 PutObject API.

4) Wait

96 t.Log("Sleep 3 seconds")

97 time.Sleep(3 * time.Second)

With this event-based system you could argue that waiting is not the right method. But a test should not change the tested system. Adding a trigger to the DynamoDB table would change the tested system, and that could have unwanted side effects.

5) Check Test-Item in Table

99 t.Log("Test item on DynamoDB")

100 responseDDB, err = ClientD.GetItem(context.TODO(), parmsDDBGet)

101 assert.NilError(t, err, "Get Item should work")

102 // Item itself with attribute time

103 assert.Equal(t, 2, len(responseDDB.Item))

Now this is the real end2end test. The system behaves as defined. Yes! Go celebrate.

Run the test with:

export I_TEST="yes"

go test -run TestAppInvokeLambdaWithEvent -v

Should give:

=== RUN TestAppInvokeLambdaWithEvent

integration_test.go:70: Setup - delete item

integration_test.go:87: Copy object to S3

integration_test.go:96: Sleep 3 seconds

integration_test.go:99: Test item on DynamoDB

--- PASS: TestAppInvokeLambdaWithEvent (3.29s)

PASS

ok dsl 3.706s

6) Delete testitem in table

Usually you clean up after the test. But in the early phases you want to have a look at the generated item, so there is no cleanup yet.

Everything tested

With all these test types you have a safety net for your app. So if you want to refactor something, all functionality is secured!

Source

See the full source on GitHub.